In the following sections we introduce the basic classes and algorithms that are used to connect a haptic device to a virtual world.

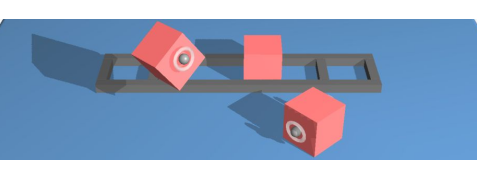

A tool is a 3D object that is used to connect, model, and display a haptic device in a virtual world. A tool is modelled graphically by one or more contact spheres called haptic points (cHapticPoint) that model the interactions between the haptic device and the environment. The simplest tool (cToolCursor) uses a single sphere, whereas a gripper tool (cToolGripper) uses two or more contact points to model a grasping-like interaction (thumb and finger).

A set of force rendering algorithms (cAlgorithmFingerProxy and cAlgorithmPotentialField) that compute the interaction forces between the haptic point and the environment is associated with each haptic point (cHapticPoint). The type of object encountered determines which of the force rendering algorithms is used.

Once the contact forces have been computed at each haptic point of the tool, they are combined and converted into a force, a torque, and a gripper force that are sent to the haptic device.

The following code illustrates how to create a single point contact tool (cursor) and connect it to a haptic device.

Once a tool has been instantiated, we create a haptic thread that continuously reads the position of the haptic device, computes the interaction forces between the tool and the environment, and sends the computed forces back to the haptic device. If any additional behaviours need to be modelled (e.g. picking or moving an object), these are generally implemented in the same haptics loop. To get a better understanding of these features, we invite you to explore the different CHAI3D examples.

When the physical workspace of the haptic device differs from the workspace size of the virtual world, a scale factor must be introduced. The scale factor is programmed by assigning a desired workspace size to the tool.

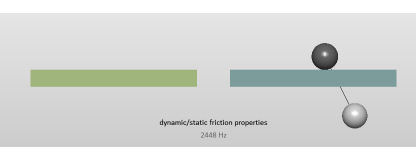

As haptic devices differ from one another (e.g. size, range of forces, maximum closed-loop stiffness), it is important to consult their physical characteristics before assigning haptic properties to the virtual environment, in order to prevent instabilities in the haptic rendering.

The following code sample illustrates how the material stiffness property of an object is programmed according to the specifications of the haptic device. In this example, we take into account the maximum closed-loop stiffness supported by the haptic device and the workspace scale factor between the tool and the device.

As illustrated in the above figure, a haptic tool is composed of a set of haptic points (contact spheres) that model the interaction forces between the tool and the environment. When a tool computes the interaction forces, the rendering algorithm loops through every haptic point and computes all local contributions independently.

The methodologies that are used to compute the interaction forces at these points are called the force rendering algorithms. When the interaction forces are computed for a given tool, each haptic point parses the entire world (or scenegraph) for each algorithm and computes these forces. CHAI3D currently implements two force models, namely the potential field and the finger-proxy models.

Potential fields are easy to implement and require little CPU time. On the flip side, they have limitations that must be handled with care to avoid unwanted artefacts or instabilities (e.g. pop-through effects across thin objects, discontinuities at corners, etc.). The finger-proxy model addresses many of these problems, but at the cost of a more computationally expensive collision detection algorithm. More information about these algorithms is presented in the following subsections.
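To make the trade-off concrete, here is a minimal sketch of a penalty-style potential field for a solid sphere. It is a generic formulation written for illustration, not CHAI3D's implementation, and `sphereFieldForce` is a hypothetical name. The force depends only on the current position of the haptic point, which is why it is cheap to compute, and also why it flips direction once the point passes the centre (the pop-through effect).

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };

// Force on a haptic point from a sphere modelled as a potential field.
// Inside the sphere the point is pushed radially outward with a magnitude
// proportional to the penetration depth (radius - distance to centre).
Vec3 sphereFieldForce(const Vec3& point, const Vec3& center, double radius, double stiffness) {
    const Vec3 d{point.x - center.x, point.y - center.y, point.z - center.z};
    const double dist = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);

    // Outside the sphere: no force. Exactly at the centre the outward
    // direction is undefined, so this sketch also returns zero there.
    if (dist >= radius || dist == 0.0) {
        return Vec3{0.0, 0.0, 0.0};
    }

    // stiffness * penetration, applied along the unit vector d / dist
    const double scale = stiffness * (radius - dist) / dist;
    return Vec3{scale * d.x, scale * d.y, scale * d.z};
}
```

A point halfway into the sphere on the +x side is pushed toward +x; if the user pushes it past the centre, the same formula pushes it out the far side instead of back where it came from.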

In practice, both approaches can be combined to create rich environments while still maintaining the real-time performance needed to guarantee stable behaviour of the haptic device.

Additional haptic effects can also be activated and adjusted through the material property class. Haptic effects use potential field algorithms which are also supported on any generic shape object. Note that the magnetic effect is not currently supported on mesh objects.

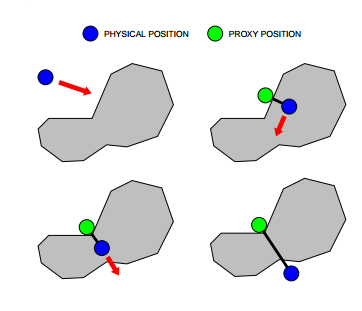

To haptically render mesh objects, CHAI3D uses a virtual "finger-proxy" algorithm developed by Ruspini and Khatib, similar to the "god-object" proposed by Zilles and Salisbury. The virtual proxy is a representative object that substitutes for the physical finger or probe in the virtual environment.

The above figure illustrates the motion of the virtual proxy as the haptic device's position changes. The proxy always attempts to move toward a goal. When unobstructed, the proxy moves directly towards the goal. When the proxy encounters an obstacle, direct motion is not possible, but the proxy may still be able to reduce the distance to the goal by moving along one or more of the constraint surfaces. The motion is chosen to locally minimize the distance to the goal. When the proxy is unable to decrease its distance to the goal, it stops at the local minimum configuration. Forces are computed by modelling a virtual spring between the position of the haptic device and the proxy. The stiffness of the spring is defined in the material property.

Every time a haptic point encounters an object with an activated haptic effect, an interaction event is returned. Each interaction event reports a reference to the object, the position of the tool at the time of interaction, and the computed force.

Every time a contact between the proxy and an object occurs, a collision event is reported. A collision event contains useful information about the object, contact position, surface normal, etc.

The following example shows how to read the contact events from a haptic point.