Volume rendering is essential to scientific and engineering applications that require visualization of three-dimensional data sets. Examples include visualization of data acquired by medical imaging devices or resulting from computational fluid dynamics simulations. Users of interactive volume rendering applications rely on the performance of modern graphics accelerators for efficient data exploration and feature discovery.

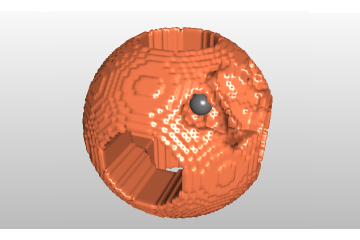

A voxel represents a value on a regular grid in three-dimensional space. "Voxel" is a portmanteau of "volume" and "pixel", where "pixel" is itself a combination of "picture" and "element". As with pixels in a bitmap, voxels do not typically have their position (their coordinates) explicitly encoded along with their values. Instead, the position of a voxel is inferred from its position relative to other voxels (i.e., its position in the data structure that makes up a single volumetric image). In contrast to pixels and voxels, points and polygons are often explicitly represented by the coordinates of their vertices. A direct consequence of this difference is that polygons can efficiently represent simple 3D structures with large amounts of empty or homogeneously filled space, while voxels are better suited to regularly sampled spaces that are non-homogeneously filled.
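
The implicit positioning described above can be sketched in a few lines of C++. This is a minimal illustration, not CHAI3D code: `VoxelGrid`, its members, the x-fastest memory layout, and the isotropic `spacing` are all assumptions made for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical dense voxel grid (not a CHAI3D type).
// Only values are stored; coordinates are never written to memory.
struct VoxelGrid {
    std::size_t nx, ny, nz;          // number of voxels along each axis
    double spacing;                  // edge length of one voxel (assumed isotropic)
    std::vector<std::uint8_t> data;  // one value per voxel, x varies fastest

    VoxelGrid(std::size_t nx_, std::size_t ny_, std::size_t nz_, double spacing_)
        : nx(nx_), ny(ny_), nz(nz_), spacing(spacing_), data(nx_ * ny_ * nz_, 0) {}

    // Offset of voxel (x, y, z) in the flat array.
    std::size_t index(std::size_t x, std::size_t y, std::size_t z) const {
        assert(x < nx && y < ny && z < nz);
        return x + nx * (y + ny * z);
    }

    // Coordinate of a voxel center along one axis, derived from its index alone.
    double center(std::size_t i) const {
        return (static_cast<double>(i) + 0.5) * spacing;
    }
};
```

Under these assumptions, a grid of 4 x 3 x 2 voxels stores exactly 24 values, and the voxel at index (1, 2, 1) lives at offset 21 in the array. Its spatial position is recomputed from the index whenever it is needed.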

Voxels are frequently used in the visualization and analysis of medical and scientific data. Some volumetric displays use voxels to describe their resolution. For example, a display might be able to show 512×512×512 voxels.

Volume objects are implemented in CHAI3D with a class called cVoxelObject, where the volume data is stored in memory as a 3D texture (cTexture3D). In the following code we illustrate how to set up such an object and program the individual voxels.

To edit the content of the voxel data, you can use the following method:

After the data has been modified (one or more voxels), always flag the texture for update so that the changes are transferred from CPU to GPU memory.
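
The edit-then-flag pattern can be illustrated with a small standalone sketch. It does not use the CHAI3D classes: `VolumeBuffer`, `setVoxel`, `markForUpdate`, and `uploadIfNeeded` are hypothetical names chosen for the example, and the actual GPU transfer is replaced by a counter.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Rgba = std::array<std::uint8_t, 4>;

// Hypothetical CPU-side copy of a 3D texture (not the CHAI3D class).
class VolumeBuffer {
public:
    VolumeBuffer(std::size_t nx, std::size_t ny, std::size_t nz)
        : nx_(nx), ny_(ny), nz_(nz), voxels_(nx * ny * nz, Rgba{0, 0, 0, 0}) {}

    // Write one voxel in CPU memory only; the GPU copy is now out of date.
    void setVoxel(std::size_t x, std::size_t y, std::size_t z, const Rgba& color) {
        voxels_[offset(x, y, z)] = color;
    }

    Rgba voxel(std::size_t x, std::size_t y, std::size_t z) const {
        return voxels_[offset(x, y, z)];
    }

    // Explicitly request a CPU-to-GPU transfer before the next frame.
    void markForUpdate() { dirty_ = true; }

    // Would be called by the renderer once per frame.
    // Returns true if a transfer took place.
    bool uploadIfNeeded() {
        if (!dirty_) return false;
        // A real renderer would copy voxels_ to the GPU texture here.
        dirty_ = false;
        ++uploads_;
        return true;
    }

    std::size_t uploadCount() const { return uploads_; }

private:
    std::size_t offset(std::size_t x, std::size_t y, std::size_t z) const {
        assert(x < nx_ && y < ny_ && z < nz_);
        return x + nx_ * (y + ny_ * z);
    }

    std::size_t nx_, ny_, nz_;
    std::vector<Rgba> voxels_;
    bool dirty_ = false;
    std::size_t uploads_ = 0;
};
```

One plausible benefit of an explicit flag, as opposed to uploading on every write, is batching: many voxels can be edited in a loop and transferred in a single upload. In this sketch, edits made without calling `markForUpdate()` never reach the (simulated) GPU copy.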

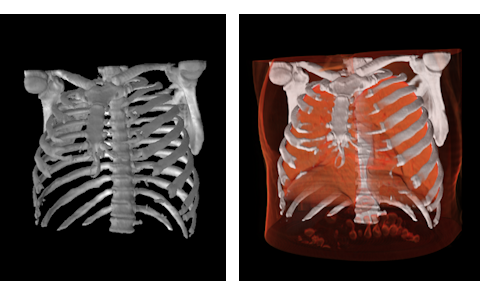

Volume models can also be constructed by loading a stack of 2D images into memory, where each image provides one slice of the volume.
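
As a rough sketch of what stacking involves, the following standalone C++ code concatenates equally sized grayscale slices into one contiguous volume. The types `Slice` and `Volume` and the functions `stackSlices` and `voxelAt` are hypothetical and do not correspond to CHAI3D classes; decoding the image files themselves is assumed to have happened already.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical decoded grayscale image: row-major, width * height pixels.
struct Slice {
    std::size_t width;
    std::size_t height;
    std::vector<std::uint8_t> pixels;
};

// Hypothetical volume: slices stored back to back, x fastest, then y, then z.
struct Volume {
    std::size_t nx = 0, ny = 0, nz = 0;
    std::vector<std::uint8_t> voxels;
};

// Build a volume from a stack of slices; slice k becomes the plane z = k.
Volume stackSlices(const std::vector<Slice>& slices) {
    Volume v;
    if (slices.empty()) return v;

    v.nx = slices.front().width;
    v.ny = slices.front().height;
    v.nz = slices.size();
    v.voxels.reserve(v.nx * v.ny * v.nz);

    for (const Slice& s : slices) {
        if (s.width != v.nx || s.height != v.ny || s.pixels.size() != v.nx * v.ny) {
            throw std::invalid_argument("all slices must share the same dimensions");
        }
        v.voxels.insert(v.voxels.end(), s.pixels.begin(), s.pixels.end());
    }
    return v;
}

// Read back a single voxel from the stacked volume.
std::uint8_t voxelAt(const Volume& v, std::size_t x, std::size_t y, std::size_t z) {
    assert(x < v.nx && y < v.ny && z < v.nz);
    return v.voxels[x + v.nx * (y + v.ny * z)];
}
```

The dimension check matters in practice: a single slice with a different resolution would silently shift every voxel that follows it.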

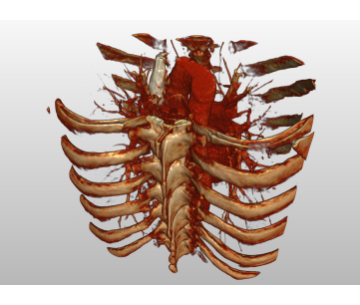

If your volume images are grayscale (for example, CT or MRI data), you can use a transfer function to colorize the data. The role of the transfer function is to emphasize features in the data by mapping values and other data measures to optical properties. The simplest and most widely used transfer functions are one-dimensional: they map the range of data values to color and opacity. Typically, these transfer functions are implemented as 1D texture lookup tables.
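
A one-dimensional transfer function of this kind can be sketched on the CPU as a 256-entry table indexed by the 8-bit data value. The code below is an illustration under stated assumptions rather than the CHAI3D implementation: `TransferFunction`, `makeRamp`, and `colorize` are hypothetical names, and a simple linear ramp between two colors stands in for whatever mapping an application would actually design.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Rgba = std::array<std::uint8_t, 4>;          // red, green, blue, opacity
using TransferFunction = std::array<Rgba, 256>;    // one entry per 8-bit data value

// Linear interpolation between two 8-bit channel values, rounded to nearest.
std::uint8_t lerp8(std::uint8_t a, std::uint8_t b, double t) {
    assert(t >= 0.0 && t <= 1.0);
    return static_cast<std::uint8_t>(a + (b - a) * t + 0.5);
}

// Build a lookup table that ramps linearly from `low` (value 0) to `high` (value 255).
TransferFunction makeRamp(const Rgba& low, const Rgba& high) {
    TransferFunction lut{};
    for (std::size_t i = 0; i < lut.size(); ++i) {
        const double t = static_cast<double>(i) / static_cast<double>(lut.size() - 1);
        for (std::size_t c = 0; c < 4; ++c) {
            lut[i][c] = lerp8(low[c], high[c], t);
        }
    }
    return lut;
}

// Map each grayscale sample to color and opacity with a single table lookup.
std::vector<Rgba> colorize(const std::vector<std::uint8_t>& gray,
                           const TransferFunction& lut) {
    std::vector<Rgba> out;
    out.reserve(gray.size());
    for (std::uint8_t g : gray) {
        out.push_back(lut[g]);
    }
    return out;
}
```

With a ramp from fully transparent black to opaque orange, low data values (air or background in many scans) become invisible while high values stand out. On the GPU the same table would typically be uploaded as a 1D texture and sampled in the shader.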